When a conference peer review process breaks down, it’s not a pretty sight. Here’s how to keep yours motoring on.

The peer review process is ultimately about asking the experts in a field to validate the quality of a piece of work and to help shape it for publication. Despite the well-documented flaws in peer review, it’s still the dominant method of identifying the most significant, novel, rigorous and original work in research.

What is Peer Review?

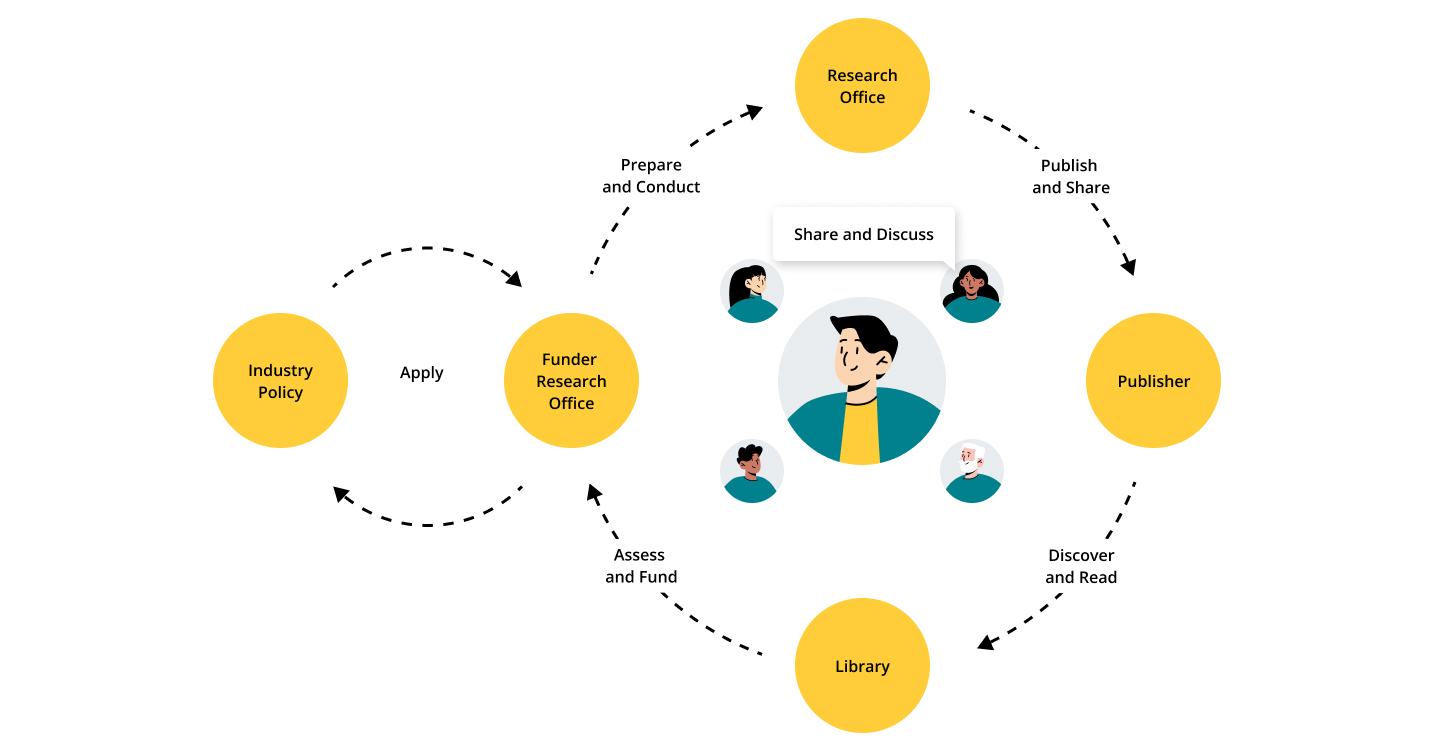

Research Lifecycle

Most researchers take on a variety of roles over the course of their careers. This journey is often called the scholarly lifecycle: a researcher guides their ideas from conception to experimentation, then through publication and discovery, evaluation, and beyond to real-world impact. Peer review plays an important part in this process at both the conference level and the journal level.

The diagram above shows the research lifecycle, with researchers, research communities and the organisations that represent them (such as scholarly societies) at its centre.

Conference peer review vs. journal peer review

When you’re designing your conference’s peer review process, it’s helpful to be aware of some of the important differences between conference peer review and journal peer review.

Research submitted to a journal will often be re-drafted and polished many times over, whereas an abstract submitted to a conference may be a little more rough-hewn. This is because conference proceedings reflect the most up-to-date research in your field. For this reason, reviewing for a conference can be incredibly rewarding, as it serves to keep your reviewers aware of emerging trends in their discipline. And while it may not come with the same prestige as reviewing for a journal, your reviewers will be assessing work that’s right on the expanding frontier of their field.

When it comes to choosing your conference’s method of peer review, your organising committee will also likely enjoy more freedom than the editorial board of a traditional journal. (For example, one computer science conference couldn’t decide between single- and double-blind peer review, so it tried both.) This means reviewing for your conference may also give reviewers the chance to take part in more diverse types of peer review than they might experience at a journal.

That being said, overseeing your conference peer review process requires careful navigation. Conference peer review typically spans a few intense weeks in which each reviewer evaluates multiple submissions. That’s a significant time investment, and it usually ends in a firm deadline, particularly when camera-ready submissions have to meet a print deadline.

This means that any weaknesses in your peer review process are magnified, because your reviewers will come up against them multiple times. And a poorly designed conference review process can result in unhappy reviewers who fail to deliver quality reviews, withdraw their offers, or simply stop responding to your increasingly desperate emails…

What happens when the review process breaks down

When a conference review process breaks down, reviewers fail to provide the quality feedback that’s needed. High-quality submissions get unfairly rejected (or sub-standard ones get accepted), authors don’t get the feedback they need to improve, and the resulting conference proceedings aren’t a showcase of the latest and greatest research in your field. In short, without your reviewers on side, you don’t have a research conference.

So it’s always astounding when a conference organiser acts in ways that frustrate or annoy their reviewers. Need an example? We once worked with an organiser who, against all our advice, allocated 120 submissions to each of their reviewers. I repeat: one hundred and twenty submissions. (Spoiler: The review process didn’t go too well…)

So instead of ruffling your reviewers’ feathers, use the following tips to ensure your conference has a robust peer review process.

1. Don’t overload your reviewers

It’s really important that you invite enough reviewers for your conference. I can’t overstate how important this is. If you don’t invite enough reviewers, you’ll end up overloading the ones you have. Overloaded reviewers are not happy reviewers. And unhappy reviewers tend to withdraw their offer to review or go AWOL entirely. When they do, you’ll be left scrambling to replace them or off-loading their submissions onto other reviewers. Either way, there’s a good chance your conference peer review process will be compromised.

How to work out how many reviewers you need

Use the steps below to work out how many reviewers your conference needs, before you send a single invite.

- First, consider how long it’s likely to take someone to review a single submission. (A paper generally takes around 4–6 hours; an abstract can take around 30 minutes.)

- Then, decide how many hours of work it’s fair to ask of your reviewers.

- Divide the hours of work by the time it takes to complete one review.

- This will give you the maximum number of submissions you should allocate to each reviewer. Mighty stuff.

- Next, consider the total number of submissions you’re expecting to receive.

- Multiply the total number of submissions by how many reviews each submission needs. (Two is usually the minimum, but three reviews means you’ll have a swing vote if two reviewers disagree.)

- You now have the number of individual reviews your conference needs. Phew.

- Now, divide the number of individual reviews by the maximum number of submissions you should allocate to each reviewer. (That’s the figure you worked out in the fourth step, if I’ve lost you.)

- Congratulations, you now know the number of reviewers you need. But you’re not done just yet…

- Finally, add around 15% to the above number. Reviewers will decline or go missing, topics won’t be equally spread, nepotism or conflicts of interest may be an issue. Plan for reviewer drop-off now, rather than further down the line.
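The steps above boil down to a few lines of arithmetic. Here’s a minimal Python sketch, assuming you measure time in minutes, ask for three reviews per submission and add the 15% buffer suggested above. The function and parameter names are hypothetical, for illustration only; this is not an official tool.

```python
# Hypothetical helper: names and defaults are illustrative, not an official tool.
from dataclasses import dataclass


def ceil_div(a: int, b: int) -> int:
    """Integer division that rounds up (avoids floating-point surprises)."""
    return -(-a // b)


@dataclass
class ReviewerPlan:
    max_per_reviewer: int     # most submissions one reviewer should get
    total_reviews: int        # individual reviews the conference needs
    base_reviewers: int       # reviewers needed if nobody drops out
    reviewers_to_invite: int  # base figure plus the drop-off buffer


def plan_reviewers(minutes_per_review: int,
                   minutes_per_reviewer: int,
                   expected_submissions: int,
                   reviews_per_submission: int = 3,
                   buffer_percent: int = 15) -> ReviewerPlan:
    # Steps 1-4: divide each reviewer's time budget by the time one review takes.
    # Round down so no one is asked for more hours than they agreed to.
    max_per_reviewer = minutes_per_reviewer // minutes_per_review
    if max_per_reviewer < 1:
        raise ValueError("one review takes longer than a reviewer's time budget")
    # Steps 5-7: submissions x reviews per submission = individual reviews.
    total_reviews = expected_submissions * reviews_per_submission
    # Steps 8-9: divide by the per-reviewer cap, rounding up (no partial reviewers).
    base_reviewers = ceil_div(total_reviews, max_per_reviewer)
    # Step 10: add the buffer for drop-outs, conflicts and uneven topic spread.
    reviewers_to_invite = ceil_div(base_reviewers * (100 + buffer_percent), 100)
    return ReviewerPlan(max_per_reviewer, total_reviews, base_reviewers,
                        reviewers_to_invite)


# 400 abstracts at ~30 minutes each, 5 hours (300 minutes) per reviewer.
print(plan_reviewers(30, 300, 400))
```

For 400 abstracts, this works out at 10 abstracts per reviewer, 1,200 individual reviews and 120 reviewers, or 138 invitations once the 15% buffer is added. Rounding down the per-reviewer cap but rounding up the headcount keeps every estimate on the reviewer-friendly side.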

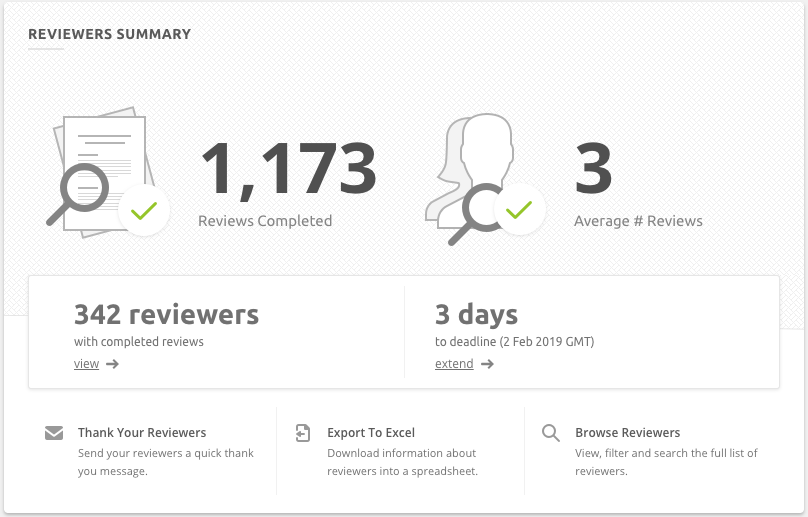

(Psst…Don’t like doing mental arithmetic? We’ve made a magic reviewer calculator to help you work this out.)

2. Set realistic review deadlines

Your reviewers are busy people with full-time jobs (and lives, even). One fantastic way to get on their bad side is to create review deadlines they can’t possibly meet. Maybe you had a lot of late submissions, which pushed your review timeslot back, but you still need to get acceptance letters to successful authors by an unmovable date. “I’ll just cut the review deadline by a week or so”, you think.

Nope.

It’s nigh impossible to precisely plan every conference deadline. But this isn’t an excuse to put your reviewers under pressure with an unfair review deadline. Your reviewing process will take place on top of their existing workload (which, let’s face it, is probably overloaded as it is) so you need to keep this in mind when you’re designing it.

How to build a robust submission and review timeline

The vast majority of conference submissions come in around deadline day, so plan for this in advance. Create public and private submission deadlines (a week or two apart) to give yourself some buffer time. Advertise the public deadline in your call for papers, but use the private deadline as the jumping-off point for your peer review process. This way you can accept late submissions without affecting when the review starts.

Then, recognise that the deadline you give your reviewers will slip. Most of the conferences we work with give reviewers four weeks to complete their reviews, and it’s completely normal for this to run over by a week or two. So make sure you leave some buffer time between your review deadline and your next immovable date, whether that’s sending your book of proceedings to the printers or building your conference’s mobile app. Top tip: find a platform that, like Ex Ordo, can sync your programme into your mobile app in one click.
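To check whether a timeline like this actually fits, you can work forward from the advertised deadline. The Python sketch below is a hypothetical helper, assuming a two-week gap to the private deadline, the four-week review window mentioned above and two weeks of slippage; swap in your own numbers.

```python
# Hypothetical timeline helper; the defaults mirror the rules of thumb above.
from datetime import date, timedelta


def build_timeline(public_deadline: date, immovable_date: date,
                   private_gap_weeks: int = 2, review_weeks: int = 4,
                   slip_weeks: int = 2) -> dict:
    # The private deadline sits after the advertised one to absorb late submissions.
    private_deadline = public_deadline + timedelta(weeks=private_gap_weeks)
    # Reviewing starts from the private deadline, so late papers don't delay it.
    review_deadline = private_deadline + timedelta(weeks=review_weeks)
    # Assume reviews run over, and plan around the slipped date instead.
    expected_finish = review_deadline + timedelta(weeks=slip_weeks)
    return {
        "private_deadline": private_deadline,
        "review_deadline": review_deadline,
        "expected_finish": expected_finish,
        # Days left before the date that can't move (negative means trouble).
        "slack_days": (immovable_date - expected_finish).days,
    }


timeline = build_timeline(date(2025, 3, 3), immovable_date=date(2025, 5, 1))
for milestone, value in timeline.items():
    print(milestone, value)
```

With an advertised deadline of 3 March 2025 and a print date of 1 May 2025, the sketch gives a private deadline of 17 March, a review deadline of 14 April and an expected finish of 28 April, leaving only three days of slack. That’s a cue to open your call for papers earlier, not to cut your reviewers’ window.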

3. Respect reviewers’ expertise when allocating submissions

A surefire way to frustrate your reviewers is to assign them submissions on topics they haven’t agreed to review and lack expertise in. When reviewing work in an unfamiliar subject, reviewers can only appraise the quality of the argument and the writing; they aren’t qualified to assess its technical validity or relevance.

When an author’s expertise far exceeds a reviewer’s, your review process is undermined. Reviewers are forced to give superficial reviews and authors don’t get a fair assessment, which is incredibly frustrating for both sides. It also denies reviewers the chance to engage with the cutting-edge research in their own topic.

How to allocate relevant submissions to reviewers

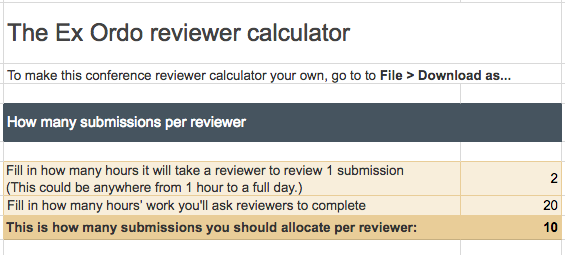

Any good peer review software will help you identify relevant reviewers

At the root of an efficient peer review process is the relevant allocation of submissions to reviewers. So how do you build this allocation?

When you open your call for papers, include a list of topics that would-be authors can submit their work under. Then ensure your authors include this categorisation when they submit their research (make this stipulation crystal clear in your guide for authors, or make it a required step in your abstract management software).

When you invite your reviewers, give them the same list of topics and ask which ones they’d like to review. This lets you spot areas that need more reviewers, and when it’s time to make assignments, you can easily recognise and honour their expertise. Finally, let reviewers decline individual submissions while accepting others, as a safety net in case an allocation misses the mark.
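To make the idea concrete, here’s a minimal greedy allocation sketch in Python. It assumes each submission carries one author-chosen topic and each reviewer has a set of opted-in topics; the function name, the cap and the conflict pairs are hypothetical, and this isn’t how any particular abstract management system matches reviewers.

```python
# Hypothetical allocation sketch: a simple greedy pass, not how any particular
# abstract management system matches reviewers.
def allocate(submissions: dict, reviewers: dict,
             reviews_per_submission: int = 3, cap: int = 10,
             conflicts: frozenset = frozenset()):
    """
    submissions: {submission_id: topic chosen by the author}
    reviewers:   {reviewer_name: set of topics they opted in to review}
    conflicts:   {(reviewer_name, submission_id), ...} pairs to avoid
    Returns (assignments, shortfall_by_topic).
    """
    load = {name: 0 for name in reviewers}
    assignments = {}
    shortfall_by_topic = {}
    for sub_id, topic in submissions.items():
        # Only reviewers who chose this topic, have no conflict and are under the cap.
        eligible = [name for name, topics in reviewers.items()
                    if topic in topics
                    and (name, sub_id) not in conflicts
                    and load[name] < cap]
        # Spread the work: least-loaded reviewers first, name as a tie-breaker.
        eligible.sort(key=lambda name: (load[name], name))
        chosen = eligible[:reviews_per_submission]
        for name in chosen:
            load[name] += 1
        assignments[sub_id] = chosen
        missing = reviews_per_submission - len(chosen)
        if missing:
            # Record topics where you need to recruit more reviewers.
            shortfall_by_topic[topic] = shortfall_by_topic.get(topic, 0) + missing
    return assignments, shortfall_by_topic


submissions = {"S1": "NLP", "S2": "NLP", "S3": "Robotics", "S4": "Vision"}
reviewers = {"Ana": {"NLP"}, "Ben": {"NLP", "Vision"},
             "Cai": {"NLP", "Robotics"}, "Dev": {"Robotics"}}
assignments, gaps = allocate(submissions, reviewers, reviews_per_submission=2)
print(assignments)
print(gaps)
```

Here every submission gets two topic-matched reviewers except S4: only one reviewer opted in to Vision, so the shortfall report flags that topic as needing more recruits. A greedy pass like this won’t always find the best possible matching, but it never hands anyone a topic they didn’t choose.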

4. Don’t create a baffling marking scheme

If you really want to put your reviewers on the back foot, create a marking scheme that’s an assault on common sense. It should baffle and confuse at every turn. Extra points if you can:

- Make your instructions vague.

- Go into detail where it’s not needed, and skimp on detail where it is.

- Leave room for ambiguity at every stage.

- Leave reviewers unsure whether they’ve recommended a submission be accepted or rejected. (Bonus points for this one.)

A clear, straightforward, usable marking scheme is the basis of any successful peer review process. Without one – and a clear role for your reviewers within it – you’ll be asking them to spend unnecessary amounts of time trying to interpret your instructions or guess at what you want of them. Instead of actually reviewing.

How to outline a clear and simple marking scheme

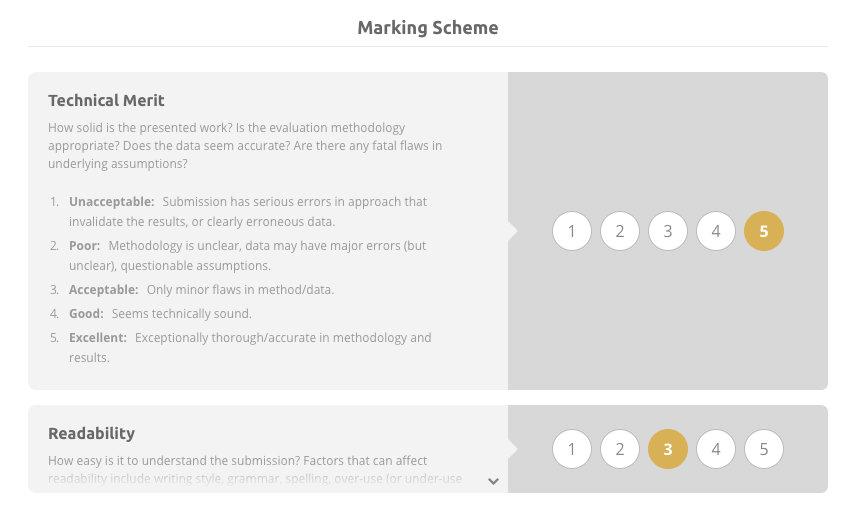

An example of a clearly laid out marking scheme

Do you want reviewers simply to indicate whether or not a submission should be presented at your conference? Or should they categorise it into oral, poster or workshop formats? Do you need them to give detailed commentary? Or should they give individual scores for categories like technical merit or readability? This should all be clear from your marking scheme.
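One way to force these decisions is to write the scheme down as structured data and run a few mechanical checks on it before anyone tests it. The Python sketch below is hypothetical: the class names, criteria and outcome labels are examples, and its checks complement the test reviews described below rather than replace them.

```python
# Hypothetical marking-scheme model for piloting; criteria and labels are examples.
from dataclasses import dataclass


@dataclass
class Criterion:
    name: str
    description: str   # what reviewers should judge; blank means they'll guess
    min_score: int = 1
    max_score: int = 5


@dataclass
class MarkingScheme:
    criteria: list            # list of Criterion objects, each scored separately
    recommendations: tuple    # the only outcomes a reviewer can pick
    comments_required: bool = True  # whether written commentary is mandatory


def check_scheme(scheme: MarkingScheme) -> list:
    """Return problems likely to leave reviewers guessing what you want."""
    problems = []
    for c in scheme.criteria:
        if not c.description.strip():
            problems.append(f"'{c.name}' has no description")
        if c.min_score >= c.max_score:
            problems.append(f"'{c.name}' has an unusable score range")
    if len(scheme.recommendations) < 2:
        problems.append("reviewers need at least two outcomes to choose from")
    if len(set(scheme.recommendations)) != len(scheme.recommendations):
        problems.append("recommendation options are duplicated")
    return problems


scheme = MarkingScheme(
    criteria=[
        Criterion("Technical merit",
                  "Are the methods sound and the conclusions supported?"),
        Criterion("Readability", ""),
    ],
    recommendations=("Accept: oral", "Accept: poster", "Reject"),
)
print(check_scheme(scheme))
```

This example flags the blank Readability description. Because every outcome is a single explicit choice such as "Accept: poster", reviewers can’t end up unsure whether they’ve recommended acceptance or rejection.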

To ensure you’re giving reviewers all the info they need, outline your marking scheme (and any descriptions included within) as early as you can. Then send it to reviewers when inviting them to review so they’re clear on what areas they’ll be asked to critique.

Then, before you begin your review process for real, round up some testers amongst your committee or volunteers. Ask them to complete a test review and give you feedback on how it went. Were they confused by anything? Getting feedback on any sticky issues now will save your reviewers (and you) pain later on down the track.

5. Create a simple reviewing experience

If you’re planning to ask reviewers to complete a lengthy scoring sheet, and write a summary of the submission, then give detailed feedback to the authors, plus write in-depth feedback to the conference chair, just…hang on a second.

Remember how long you’ve estimated it’ll take each reviewer to review one submission? How much of that will reviewers have to spend on the act of physically entering each review into your online peer review software or Google form? This might not seem like a big deal if people are reviewing one or two submissions, but if your reviewers are working through a longer list, any time they spend recording their reviews is time they may not give to completing more of them.

How to create a simple (and quick) reviewing experience

Compare the bare-minimum review (the info you need in order to fairly accept or reject a submission) to the ideal review. Is there a happy medium somewhere in between?

For example, could you balance necessary written commentary with a shorter scoring sheet? Play around with your marking scheme until you find the configuration that will give the best results in a realistic amount of time.

And make sure you’re following online form best practice. For example, if you have lots of optional sections for reviewers to complete, ask yourself whether these sections are truly necessary, and if they’re not, consider scrapping them.

6. Diversity, equity and inclusion

A pool of reviewers that is diverse in gender, location and career stage helps maintain scientific quality by exposing new research to a range of perspectives, but there are other benefits too. Carrying out peer review also helps researchers improve their own writing by learning what good papers look like.

That’s another benefit, particularly for early-career researchers and those who don’t have much experience of publishing in conferences or journals. While more reviewer training could certainly be offered to inexperienced researchers as part of research communication and early-career training programmes, conferences can take steps to increase diversity within their own review pools.

Happy reviewers mean a smoother peer review process

At the end of the day, you need your reviewers more than they need you. So walk a mile in their shoes and make sure you’re setting up a pain-free peer review process for them.